- June 27, 2024

- 6 min read

Building AI-Powered Web Applications In 20 Minutes with FriendliAI, Vercel AI SDK, and Next.js

As AI technologies have matured, building AI applications has become much easier. By drawing on the hosted AI services and the wide range of models that many companies provide and maintain, people without expert knowledge can create powerful AI applications such as:

- Educational chatbots

- Image generation tools

- Language translation services

- Personalized recommendation services

In this tutorial, we will walk you through building your own AI playground where you can experiment with Go code and learn Go in an interactive and efficient way.

The goal of this tutorial is to create an easily accessible AI playground that provides functionalities such as user prompts and system prompts via a web interface.

We will also demonstrate its usage by building an application that generates and fixes arbitrary Go code to help users learn and easily write Go code using large language models (LLMs).

Key Technologies and Tools

- Friendli Serverless Endpoints: FriendliAI provides production-ready solutions that give users and enterprises access to Friendli Inference, which serves large language models (LLMs) quickly and efficiently in terms of both resources and cost. Friendli Serverless Endpoints uses Friendli Inference to serve popular general-purpose open-source LLMs such as Llama 3, and offers them through a ChatGPT-like interface so that they are easy to access.

- Vercel AI SDK: A powerful open-source library that helps developers build conversational streaming user interfaces in JavaScript and TypeScript. It simplifies AI integration into web applications by providing tools and pre-built components that streamline development and reduce the time and effort needed to implement advanced features.

- Next.js: A popular open-source React framework developed by Vercel. It helps build fast, scalable, and user-friendly web applications. Features like server-side rendering, static site generation, and built-in API routes simplify modern web development.

Through this guide, you will learn how to:

- Build an interactive playground where people can write and test Go code in real-time.

- Develop a web application that leverages LLMs for user interaction.

- Explore a practical example of using LLMs by creating an application that corrects and improves arbitrary Go code.

Let's get started!

We will use Friendli Serverless Endpoints for LLM inference and use Next.js along with the Vercel AI SDK for the frontend.

Setting Up the Project and Installing Dependencies

- Preparing Your Token: Get a Friendli Suite Personal Access Token (PAT) after signing up at Friendli Suite.

- Initializing the Project: You can start from the default Next.js template to initialize a project named play-go-ai. For instance, simply use the following commands:

You will find a new directory named play-go-ai with a pre-configured Next.js application that uses TypeScript, Tailwind CSS, and ESLint.

- Installing the Dependencies: Next, you will need to install the required dependencies for the Vercel AI SDK along with some other necessary packages to create the web interface. Note that Friendli Serverless Endpoints is compatible with the OpenAI API and is available through the @ai-sdk/openai module. You can install these dependencies using the following command:

- Configuring the Environment Variables: To use Friendli Serverless Endpoints, you'll need to place your Personal Access Token (PAT) in a .env.local file. Create this file at the root folder of your project and add your token as shown below. In your actual environment, be sure to fill in your own PAT as the FRIENDLI_TOKEN variable.
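The .env.local file holds one KEY=value line, with FRIENDLI_TOKEN as the key and your PAT as the value. As a minimal sketch of how server code could check that the token is present before using it, the helper below reads FRIENDLI_TOKEN from an environment-like object and fails early when it is missing. The name readFriendliToken is hypothetical and is not part of Next.js or the Vercel AI SDK.

```typescript
// Hypothetical helper (not part of any SDK): reads the PAT from an
// environment-like object so a missing token fails early with a clear message.
type Env = Record<string, string | undefined>;

function readFriendliToken(env: Env): string {
  // Trim so that stray whitespace in .env.local does not end up in the token.
  const token = env.FRIENDLI_TOKEN?.trim();
  if (!token) {
    throw new Error("FRIENDLI_TOKEN is not set. Add it to .env.local.");
  }
  return token;
}
```

In a route handler, this could be called as readFriendliToken(process.env).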

Setting up a Basic Project Based On Vercel AI SDK

- Choosing a model: Selecting the right model for your application is crucial. FriendliAI offers various open-source generative AI models for easy integration, with additional benefits such as GPU-optimized inference.

In this tutorial, we’ll use meta-llama-3.1-8b-instruct, one of the latest LLMs introduced by Meta. With 8 billion parameters, it excels at understanding and generating language, providing high-quality and detailed responses. This makes it suitable for a wide range of applications, from simple chatbots to complex virtual assistants.

If none of the available models meets your application’s requirements, you also have the option to use customized or fine-tuned models through Friendli Dedicated Endpoints. For more options, explore our model library.

- Creating the Completion API Route File: Start by creating an API route using the OpenAI API. Let's create a new file named route.ts inside the app/api/completion directory that looks like this:
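The route in this tutorial relies on the Vercel AI SDK's streamText function together with the @ai-sdk/openai module. As a dependency-free sketch of the kind of OpenAI-compatible request such a route sends upstream, the helper below builds a chat completions request for a user prompt. The name buildCompletionRequest is hypothetical, and the base URL is an assumption; check the Friendli documentation for the current endpoint address.

```typescript
// Sketch only: the base URL is an assumption, and buildCompletionRequest is a
// hypothetical helper, not a Vercel AI SDK function.
const FRIENDLI_BASE_URL = "https://inference.friendli.ai/v1"; // assumed

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface CompletionRequest {
  url: string;
  body: { model: string; messages: ChatMessage[]; stream: boolean };
}

function buildCompletionRequest(prompt: string): CompletionRequest {
  return {
    url: `${FRIENDLI_BASE_URL}/chat/completions`,
    body: {
      model: "meta-llama-3.1-8b-instruct", // model named in this tutorial
      messages: [{ role: "user", content: prompt }],
      stream: true, // ask the endpoint for a streamed response
    },
  };
}
```

The returned body can be serialized with JSON.stringify and sent with fetch from the POST handler in route.ts.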

- (Optional) Team ID Configuration: You can optionally configure the request by including additional headers, such as the team ID. This ensures that only members belonging to the specific team will be able to access the requests. Here's how you can do it:
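One way to picture the optional team configuration is a small header builder that adds the team ID only when one is supplied. This is a sketch under assumptions: buildFriendliHeaders is a hypothetical helper, and the header name X-Friendli-Team should be verified against the Friendli documentation.

```typescript
// Hypothetical helper: builds request headers, adding a team header only when
// a team ID is supplied. The header name "X-Friendli-Team" is an assumption.
function buildFriendliHeaders(
  token: string,
  teamId?: string,
): Record<string, string> {
  const headers: Record<string, string> = {
    Authorization: `Bearer ${token}`,
    "Content-Type": "application/json",
  };
  if (teamId) {
    headers["X-Friendli-Team"] = teamId;
  }
  return headers;
}
```

Keeping the team header optional lets the same code serve both personal and team-scoped requests.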

- Setting Up the User Interface: Now, let’s generate the actual web page that the user sees. We will create an input box for typing in our prompts and a textarea to display the generated results. Add the following code to the app/page.tsx file:

- Running the local server: Finally, you can run the local server using the following command:

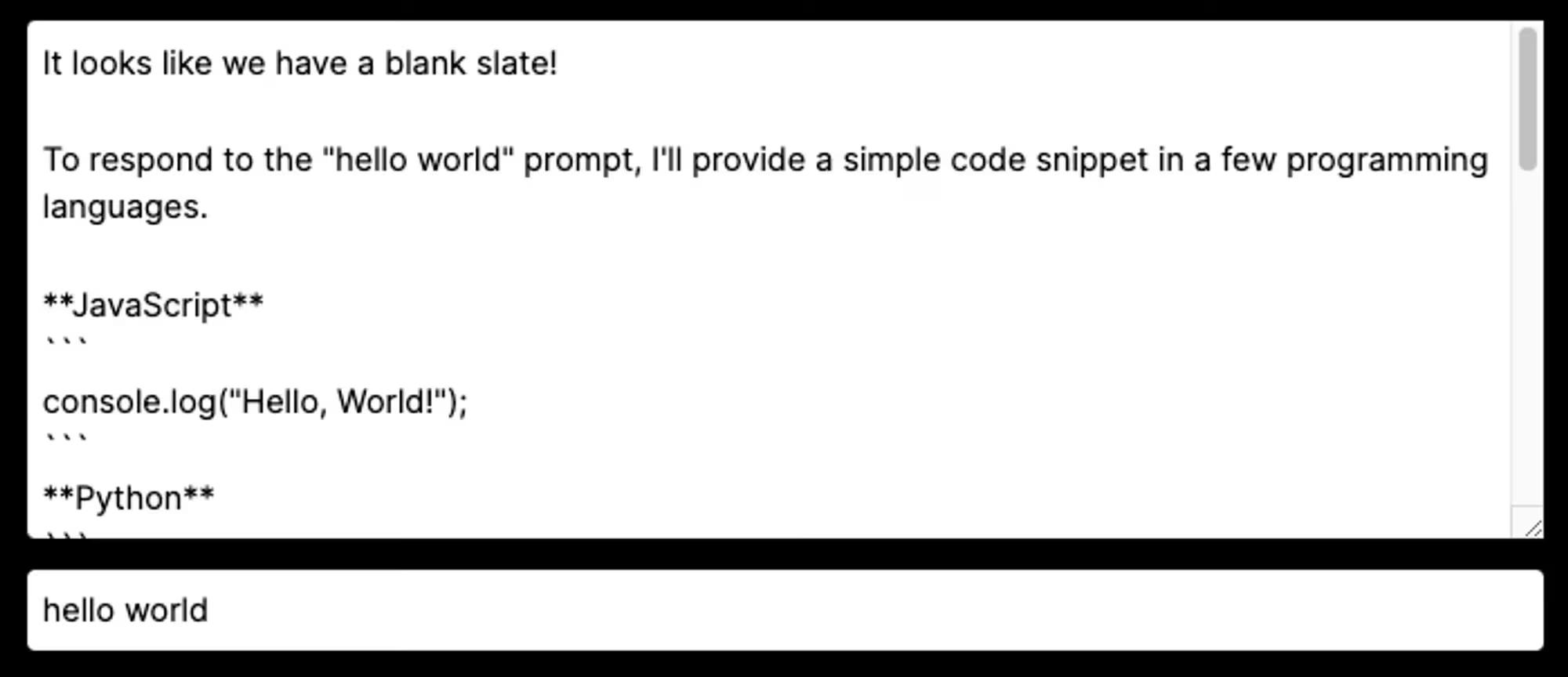

This will start the local development server, and you'll see a simple interface similar to the one shown below.

Enhancing Code Generation and Execution

Now that we know we can use simple prompts for our code completion, let's add a system prompt to the mix. This will allow us to guide the model's responses to better suit our needs.

We can add this system prompt to our code by modifying the app/api/completion/route.ts file. Specifically, we'll update the streamText function to include the system prompt:
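The streamText function accepts a system option alongside the prompt. To illustrate what a system prompt changes in the request, the sketch below places a system message ahead of the user message. The helper withSystemPrompt is hypothetical, and the prompt text is an illustrative assumption, not the wording used in the original tutorial.

```typescript
// Hypothetical helper: places the system prompt ahead of the user prompt.
// The system prompt text is an illustrative assumption.
type PromptRole = "system" | "user";

interface PromptMessage {
  role: PromptRole;
  content: string;
}

const GO_SYSTEM_PROMPT =
  "You are a Go programming assistant. Reply with a single, complete, " +
  "runnable Go program and no extra commentary.";

function withSystemPrompt(
  userPrompt: string,
  system: string = GO_SYSTEM_PROMPT,
): PromptMessage[] {
  return [
    { role: "system", content: system },
    { role: "user", content: userPrompt },
  ];
}
```

Because the system message is sent with every request, the instructions apply to each response without the user having to repeat them.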

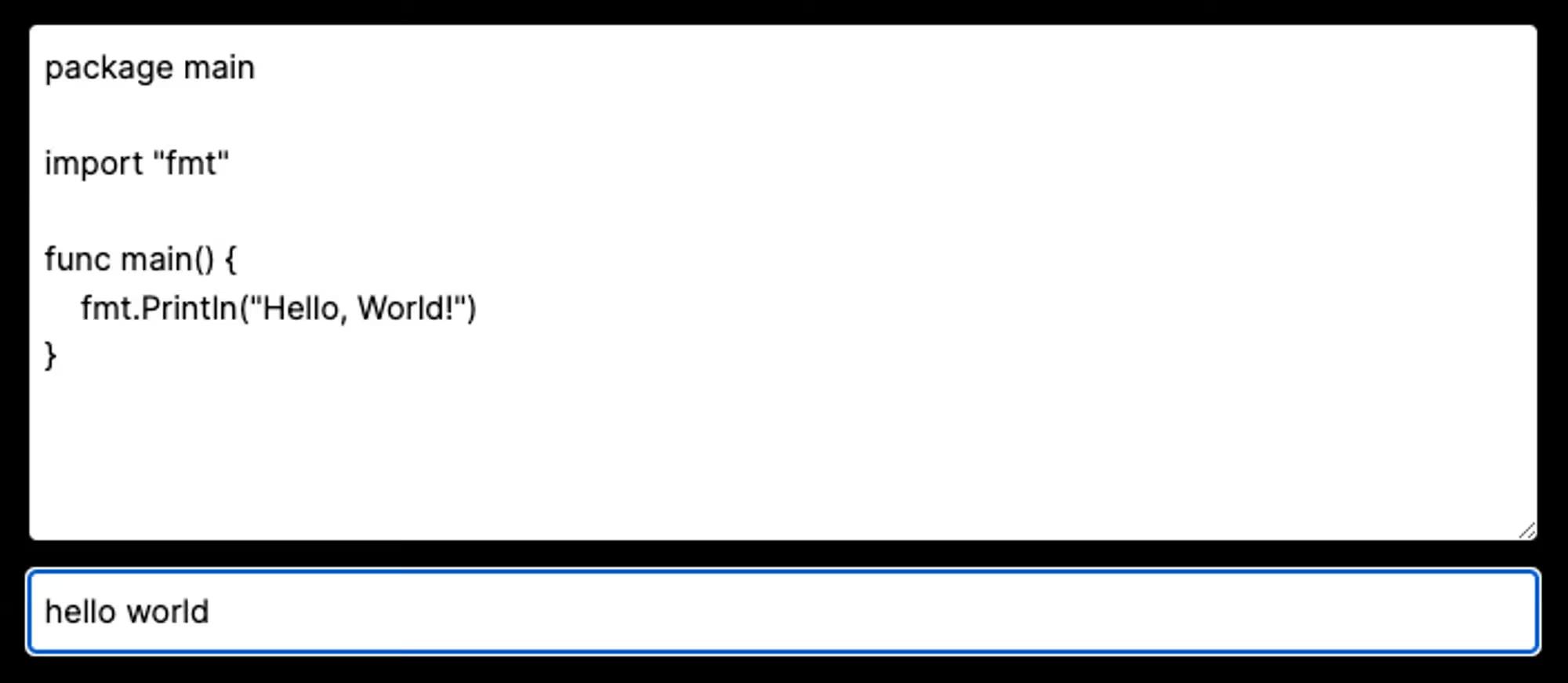

Now, let's go back to the browser and continue our conversation. Comparing the responses before and after adding the system prompt, we can see that the generated responses are now more structured and adhere strictly to the instructions given, providing us with clean and precise Go code edits, as shown below.

|  |  |
|---|---|
| Before adding the system prompt | After adding the system prompt |

A lot has changed: the code generated by the LLM now looks like it's actually executable. Therefore, let's now add the ability to execute the code through our web interface. For the code execution, we'll use the unofficial API provided by go.dev/play.

To use it, we'll create a new route handler in Next.js by adding the code below to the app/api/code/route.ts file.
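As a rough sketch of what such a route has to do, the helpers below encode Go source into a form body and collapse a playground response into error and output strings. Everything specific here is an assumption about an unofficial API that may change without notice: the compile URL, the form fields (version, body, withVet), and the response fields (Errors, Events). The helper names are hypothetical.

```typescript
// Sketch under assumptions: the URL, form fields, and response shape describe
// the unofficial go.dev/play API as commonly observed; none are guaranteed.
const GO_COMPILE_URL = "https://go.dev/_/compile"; // assumed

interface PlaygroundEvent {
  Message: string;
  Kind: string;
  Delay: number;
}

interface PlaygroundResponse {
  Errors: string;
  Events: PlaygroundEvent[] | null;
}

// Encodes Go source as an application/x-www-form-urlencoded body.
function buildCompileBody(code: string): string {
  return `version=2&body=${encodeURIComponent(code)}&withVet=true`;
}

// Collapses a playground response into separate error and output strings.
function summarizeRun(res: PlaygroundResponse): { error: string; result: string } {
  const result = (res.Events ?? []).map((e) => e.Message).join("");
  return { error: res.Errors, result };
}
```

The route handler would POST the output of buildCompileBody to GO_COMPILE_URL with a Content-Type of application/x-www-form-urlencoded, then pass the parsed JSON to summarizeRun.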

Now that we have created a server endpoint where we can format and run our code to see the results, let's add some code to our page.tsx file to use it.

As you can see above, we have added some UI for the compile server, and the generated code can now be freely edited by the user. You can now send a request to the code/route.ts endpoint that you have just added to actually execute your Go code! At this stage, we have completed the basic elements of our Go playground.

Let's make one last change here. We want to be able to feed the LLM with the results of our code execution as well as the error outputs. This will allow us to continuously work on the generated code and add more functionality to the program we've created.

Update page.tsx to:

Update completion/route.ts to:

If there is an error from the playground response, the prompt field combines the prompt, code, error, and result fields into a single string.
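The combination step can be sketched as a small function that joins whichever fields are present into one prompt string. The interface, the function name, and the section labels are illustrative assumptions; the tutorial's actual string format may differ.

```typescript
// Hypothetical shape of the data sent from the page to the completion route.
interface PlaygroundState {
  prompt: string;
  code?: string;
  error?: string;
  result?: string;
}

// Combines the fields into one prompt string. Empty or missing fields are
// skipped. The section labels are illustrative assumptions.
function buildFollowUpPrompt(state: PlaygroundState): string {
  const sections: string[] = [`Instruction:\n${state.prompt}`];
  if (state.code) sections.push(`Current code:\n${state.code}`);
  if (state.error) sections.push(`Compiler error:\n${state.error}`);
  if (state.result) sections.push(`Program output:\n${state.result}`);
  return sections.join("\n\n");
}
```

Labeling each section gives the model a clear way to tell the user's instruction apart from the code and the execution feedback.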

After this change, your work flows in one continuous loop: the playground can keep editing and improving Go code based on ongoing prompts, execution results, and error messages.

You can check the demo, with additional loading states and design assets, at play-go-ai.vercel.app.

An example of the demo application generating Go code that prints the numbers of the Fibonacci sequence, adding comments to explain the code, and then executing the code to check the result.

You can also check the source code of the demo project above and deploy the demo on your own, by referring to this GitHub repository.

Summary

In this tutorial, we developed a simple AI application using the Vercel AI SDK and Friendli Serverless Endpoints. Along the way, we saw that such a project can be deployed and operated within minutes using Vercel and FriendliAI. If you wish to use custom or fine-tuned models in your applications, you can also build on Friendli Dedicated Endpoints, which lets you upload your own models, run them on the GPUs of your choice, and expose them through endpoints similar to the ones used in this tutorial.

Congratulations on completing this tutorial! We hope that you found it informative and useful.

Happy coding!

Written by

FriendliAI Tech & Research

