Running models from OpenAI or Anthropic, or open models like GLM-5, Qwen, DeepSeek, or Llama elsewhere? Switch to Friendli Inference for better performance and lower costs, with minimal changes to your stack.

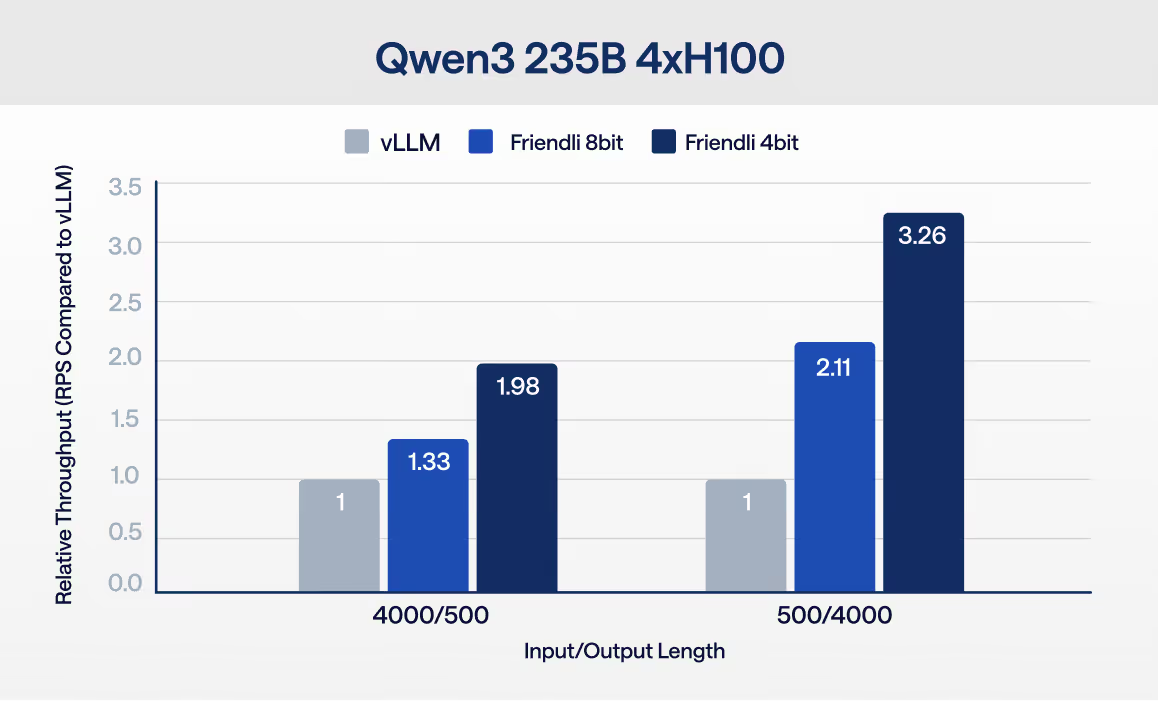

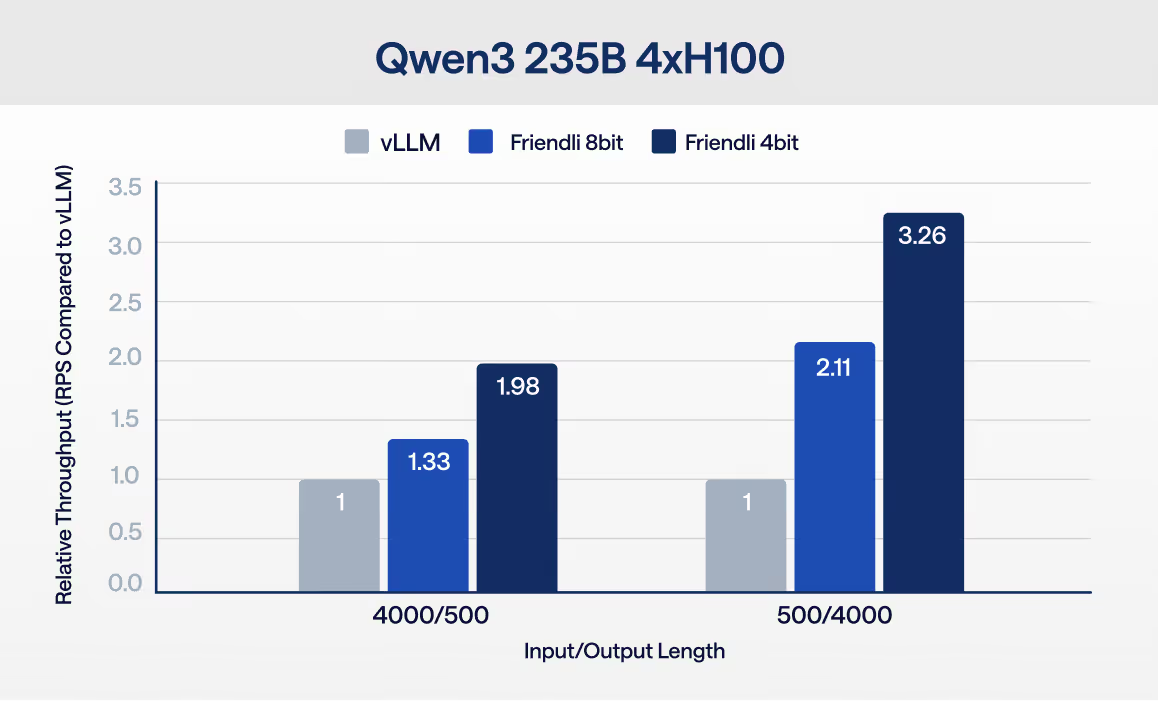

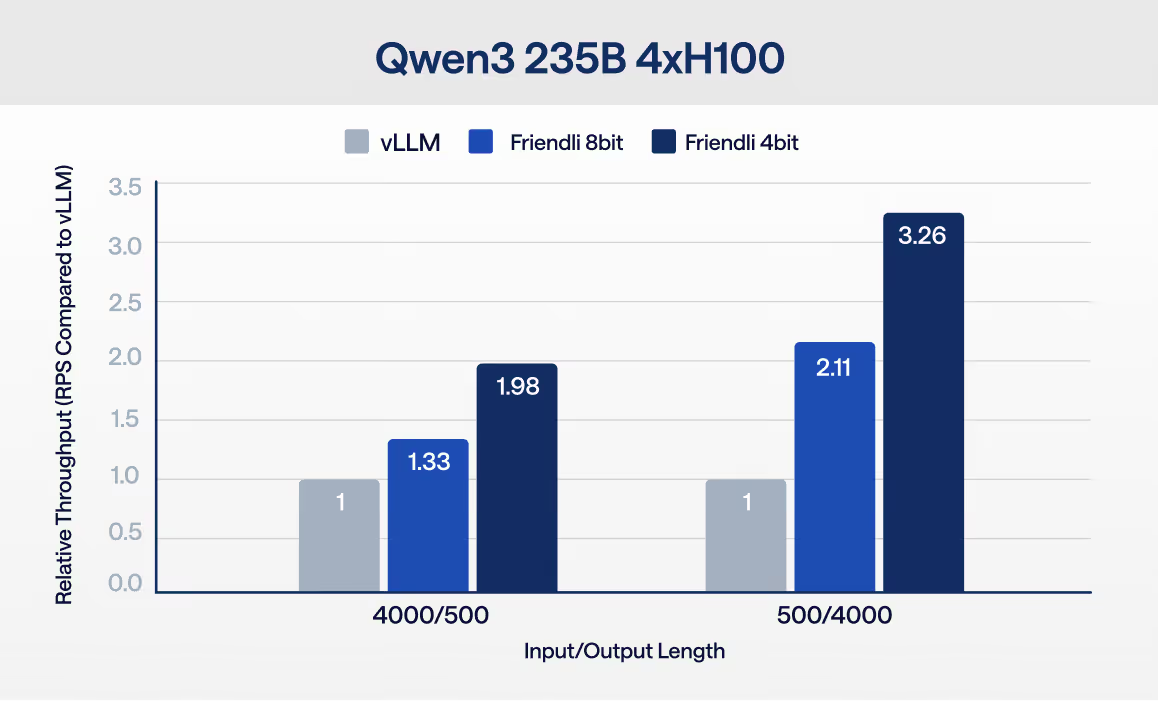

Model API costs rise quickly at scale. FriendliAI is an inference platform that helps teams switch to open models with lower latency, higher throughput, and 20–40% lower inference costs, with minimal changes to their application.

1. Submit the form with your details and your current provider bill.
2. We review and approve your credit amount.
3. Start running inference on FriendliAI using your credits.

Get up to $50,000 in inference credits when you move to FriendliAI.

Credits subject to review and approval. Offer available for a limited time.