- October 8, 2022

Serve generative AI models like T5 faster than ever with Friendli Engine (32.8x faster for T5–3B)

In our previous blog posts (#1, #2), we showed the performance gain of Friendli Engine (aka PeriFlow or Orca) on GPT-3, a popular generative AI model. Orca consistently outperformed Triton + FasterTransformer on models of various sizes, from 345M and 1.3B up to 175B parameters. GPT is a decoder-only model. Today, we look at the performance of Friendli Engine on Google’s T5, a Transformer-based encoder-decoder model that is widely used in machine translation.

T5, or Text-To-Text Transfer Transformer, is a neural network model that casts practically any language task into a text-to-text format. It differs from models such as BERT (encoder-only) or GPT (decoder-only) in that its architecture includes both an encoder and a decoder.

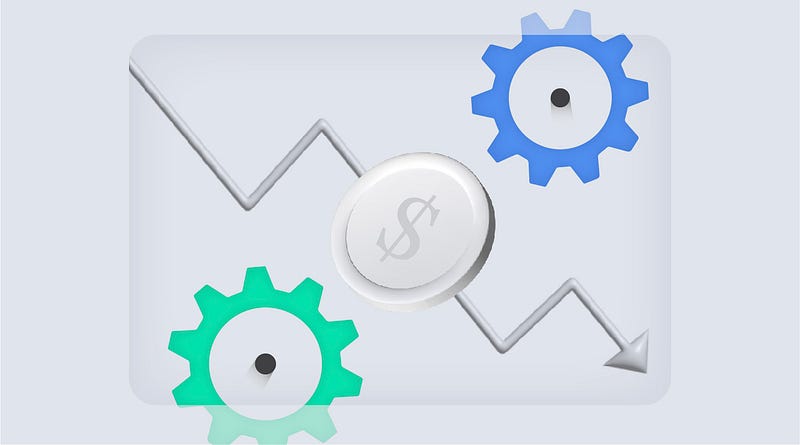

We ran our evaluation on an NVIDIA A10G GPU with a T5 model with 3B parameters. The figure shows throughput and mean normalized latency. Since each request in the trace requires a different processing time, which is (roughly) proportional to the number of tokens it generates, we report mean latency normalized by the number of generated tokens of each request.
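To make the metric concrete, here is a minimal sketch of how mean normalized latency could be computed from a request trace. The `Request` record and its field names are hypothetical, chosen only for illustration, and the sample values are made up; this is not the actual benchmarking harness.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Request:
    # Hypothetical trace record; field names are assumptions for this sketch.
    latency_ms: float       # end-to-end latency of the request
    generated_tokens: int   # number of tokens generated for the request


def mean_normalized_latency(requests):
    """Average over requests of (latency / generated tokens), in ms/token."""
    per_token = [
        r.latency_ms / r.generated_tokens
        for r in requests
        if r.generated_tokens > 0  # skip requests that generated nothing
    ]
    if not per_token:
        raise ValueError("no requests with generated tokens")
    return mean(per_token)


# Made-up sample trace: per-request values are 24, 24, and 12 ms/token.
trace = [Request(240.0, 10), Request(480.0, 20), Request(1200.0, 100)]
print(mean_normalized_latency(trace))
```

Normalizing per request before averaging keeps long generations from dominating the mean, which is the reason given above for reporting latency per generated token.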

At the same latency level of 24 ms/token, Orca has 32.8x higher throughput than NVIDIA Triton Inference Server with FasterTransformer as its backend engine.
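A comparison "at the same latency level" can be read off two latency-throughput curves by interpolating each curve at the target latency and taking the ratio. The sketch below assumes linear interpolation between measured points; the function name and all numbers are placeholders for illustration and are not the measurements behind the figure.

```python
def throughput_at_latency(curve, target_latency):
    """Linearly interpolate throughput at target_latency.

    curve: list of (normalized_latency_ms_per_token, throughput) pairs.
    """
    pts = sorted(curve)
    for (l0, t0), (l1, t1) in zip(pts, pts[1:]):
        if l0 <= target_latency <= l1:
            if l1 == l0:
                return max(t0, t1)
            frac = (target_latency - l0) / (l1 - l0)
            return t0 + frac * (t1 - t0)
    raise ValueError("target latency is outside the measured range")


# Placeholder curves (NOT results from this post), in arbitrary throughput units.
system_a = [(10.0, 1.0), (20.0, 3.0), (40.0, 4.0)]
system_b = [(10.0, 0.5), (30.0, 1.0), (50.0, 1.5)]

target = 20.0  # latency level at which the two systems are compared
ratio = throughput_at_latency(system_a, target) / throughput_at_latency(system_b, target)
print(f"throughput ratio at {target} ms/token: {ratio:.1f}x")
```

Matching on latency rather than on load makes the ratio meaningful: each system is measured at the point where it delivers the same per-token responsiveness.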

Orca provides significantly higher throughput and lower latency than Triton + FasterTransformer. Notably, as the load becomes heavier, Orca yields higher throughput with a relatively small increase in latency.

Regardless of the Transformer model architecture, whether it is a decoder-only or an encoder-decoder model, Orca continues to outperform existing serving systems.

*Orca is a product developed by FriendliAI. We provide Friendli Suite, an end-to-end AI development and serving service, which offers highly optimized engines (e.g., Orca) for Transformer models. For more information, check the link.

Written by

FriendliAI Tech & Research