Latest post

- April 12, 2024

- 3 min read

Easily Migrating LLM Inference Serving from vLLM to Friendli Container

Read full article

- April 12, 2024

- 3 min read

Easily Migrating LLM Inference Serving from vLLM to Friendli Container

Read full article

- April 8, 2024

- 3 min read

Building Your RAG Application on LlamaIndex with Friendli Engine: A Step-by-Step Guide

RAG

LlamaIndex

- April 3, 2024

- 6 min read

Improve Latency and Throughput with Weight-Activation Quantization in FP8

WAQ

FP8

- February 28, 2024

- 3 min read

Running Quantized Mixtral 8x7B on a Single GPU

Mixtral

AWQ

- February 20, 2024

- 4 min read

Serving Performances of Mixtral 8x7B, a Mixture of Experts (MoE) Model

Mixtral

MoE

- February 15, 2024

- 4 min read

Which Quantization to Use to Reduce the Size of LLMs?

AWQ

Quantization

LLM

- February 7, 2024

- 2 min read

Friendli TCache: Optimizing LLM Serving by Reusing Computations

LLM

Serving

- February 2, 2024

- 4 min read

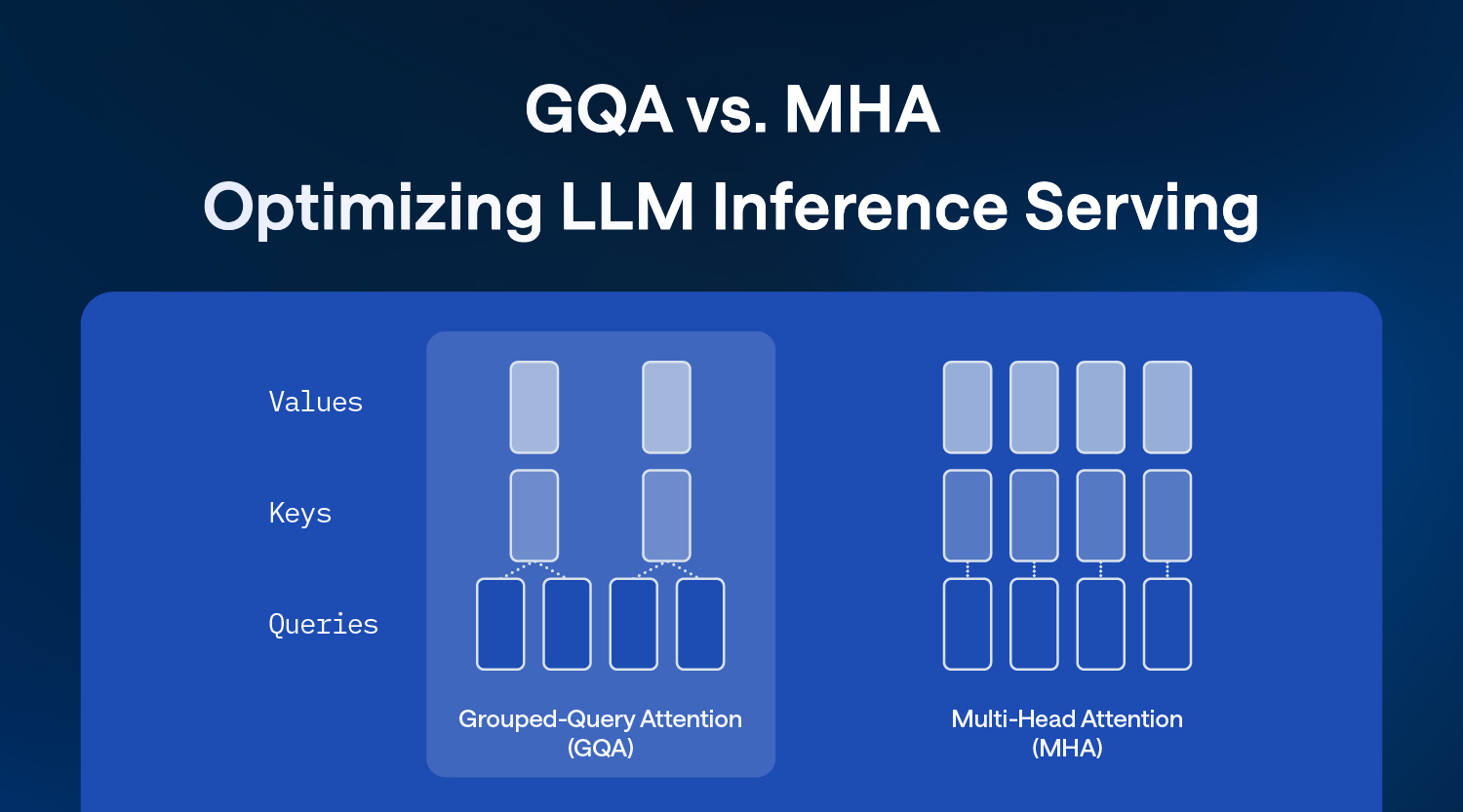

Grouped Query Attention (GQA) vs. Multi Head Attention (MHA): Optimizing LLM Inference Serving

GQA

MHA

MQA

- January 24, 2024

- 3 min read

Faster and Cheaper Mixtral 8×7B on Friendli Serverless Endpoints

LLM

Serving

- January 12, 2024

- 3 min read

The LLM Serving Engine Showdown: Friendli Engine Outshines

LLM

Serving Engine

- January 4, 2024

- 2 min read

Friendli Serverless Endpoints: Unleashing Generative AI for Everyone

inference

generative AI models

- December 11, 2023

- 3 min read

Groundbreaking Performance of the Friendli Engine for LLM Serving on an NVIDIA H100 GPU

LLM

NVIDIA H100

- November 16, 2023

- 3 min read

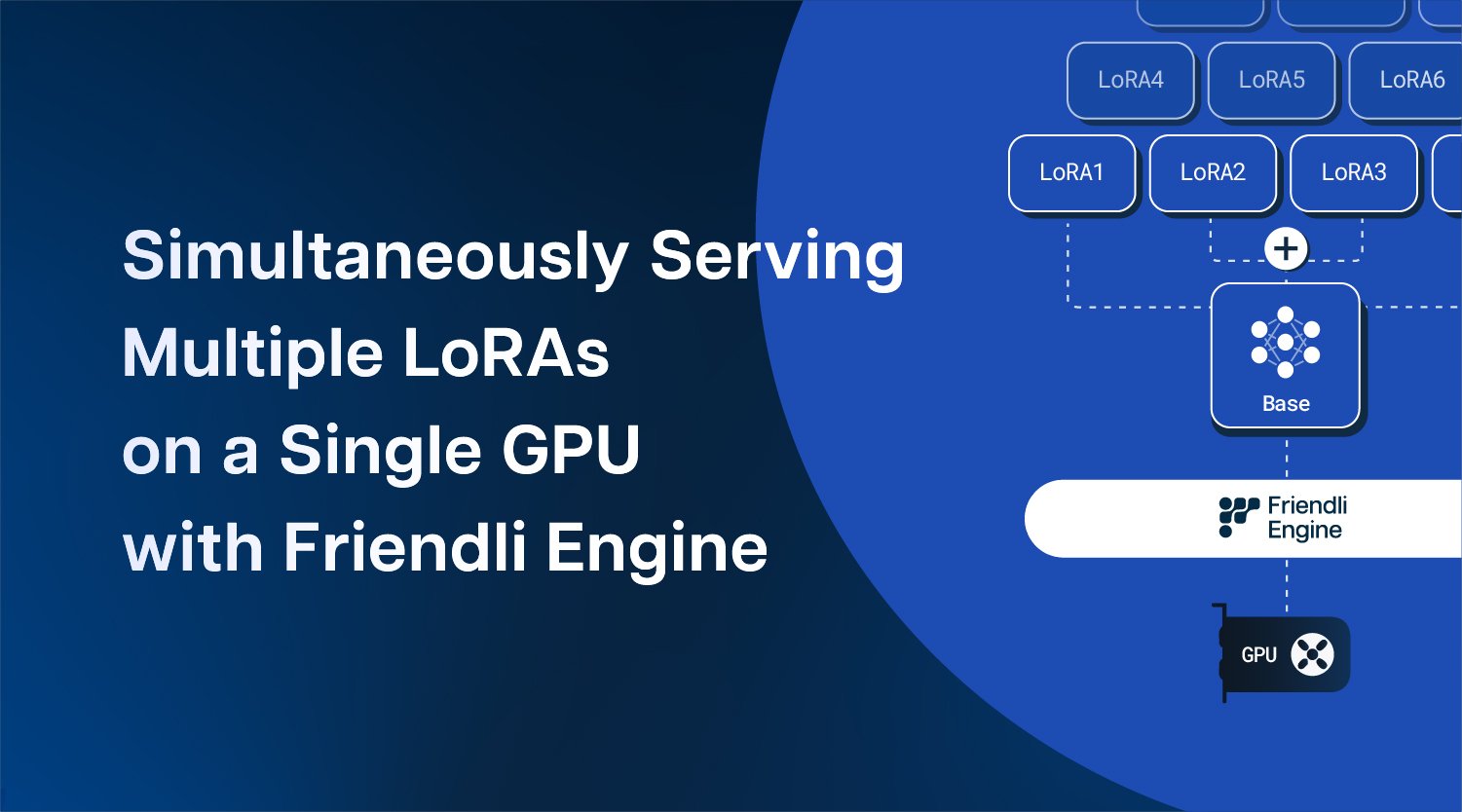

Simultaneously Serving Multiple LoRAs on a single GPU with Friendli Engine

LoRA

multi-LoRA

- November 7, 2023

- 2 min read

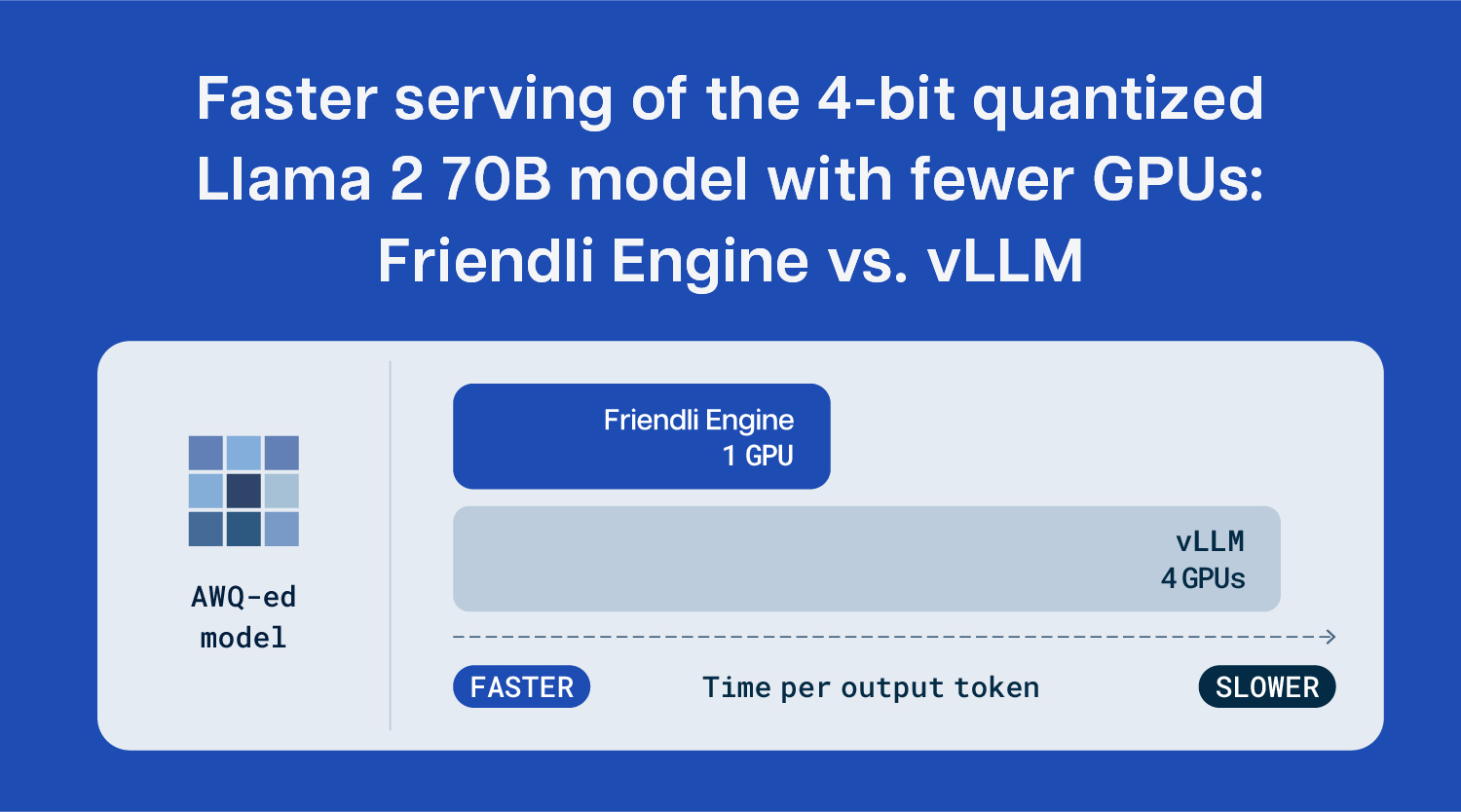

Faster serving of the 4-bit quantized Llama 2 70B model with fewer GPUs: Friendli Engine vs. vLLM

Quantization

Large Language Models

- October 30, 2023

- 3 min read

Comparing two LLM serving frameworks: Friendli Engine vs. vLLM

LLM

Inference

Serving

- October 27, 2023

- 4 min read

Chat Docs: A RAG Application with Friendli Engine and LangChain

Langchain

Large Language Models

LLM

- October 27, 2023

- 3 min read

LangChain Integration with Friendli Dedicated Endpoints

Langchain

Large Language Models

Model Serving

- October 26, 2023

- 3 min read

Retrieval-Augmented Generation: A Dive into Contextual AI

Large Language Models

Model Serving

Langchain

- October 23, 2023

- 3 min read

Unlocking Efficiency of Serving LLMs with Activation-aware Weight Quantization (AWQ) on Friendli Engine

Quantization

Large Language Models

Transformers

- October 16, 2023

- 4 min read

Understanding Activation-Aware Weight Quantization (AWQ): Boosting Inference Serving Efficiency in LLMs

Quantization

Large Language Models

Transformers

- September 27, 2023

- 2 min read

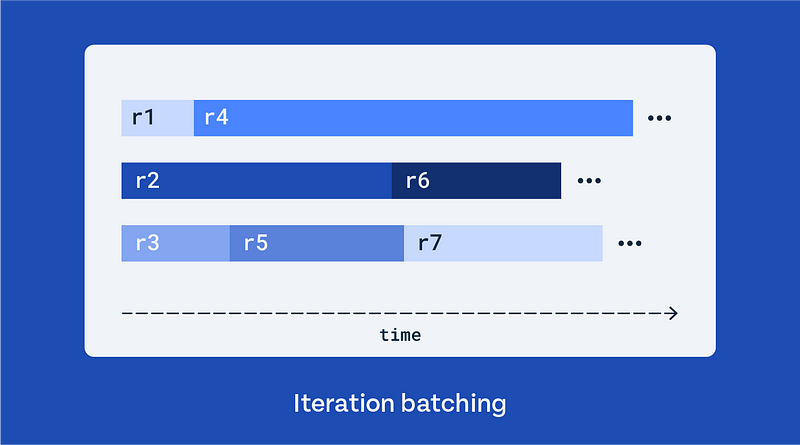

Iteration batching (a.k.a. continuous batching) to increase LLM inference serving throughput

Llm

Llm Serving

Generative AI Tools

- July 13, 2023

- 5 min read

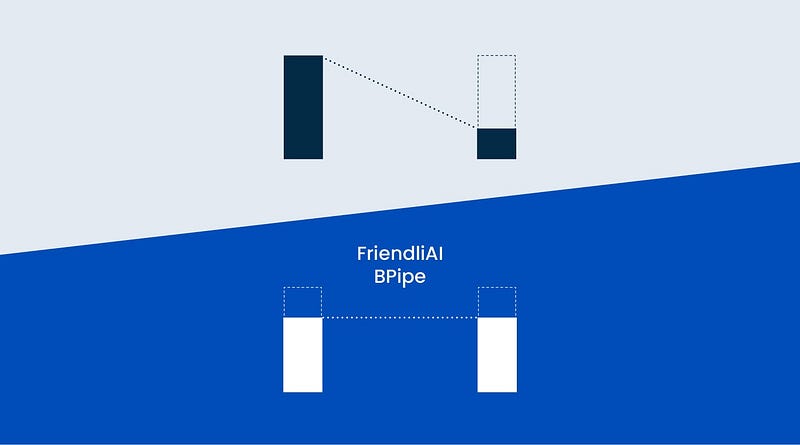

Accelerating LLM Training with Memory-Balanced Pipeline Parallelism

Large Language Models

Transformers

Distributed Systems

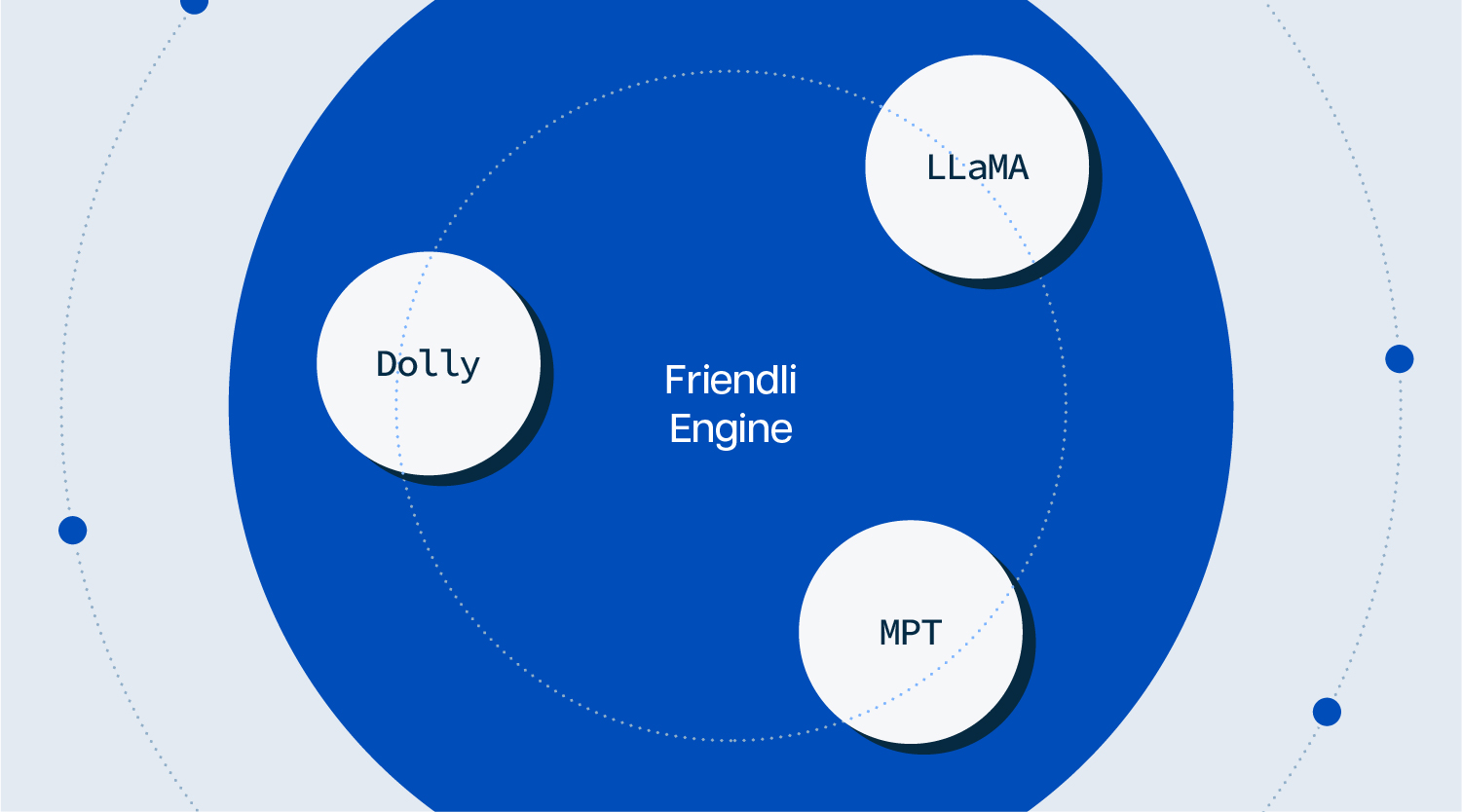

- July 3, 2023

- 2 min read

Friendli Engine's Enriched Coverage for Sought-After LLMs: MPT, LLaMA, and Dolly

Transformers

Generative Model

Large Model

- June 27, 2023

- 3 min read

Get an Extra Speedup of LLM Inference with Integer Quantization on Friendli Engine

Quantization

Transformers

Generative Model

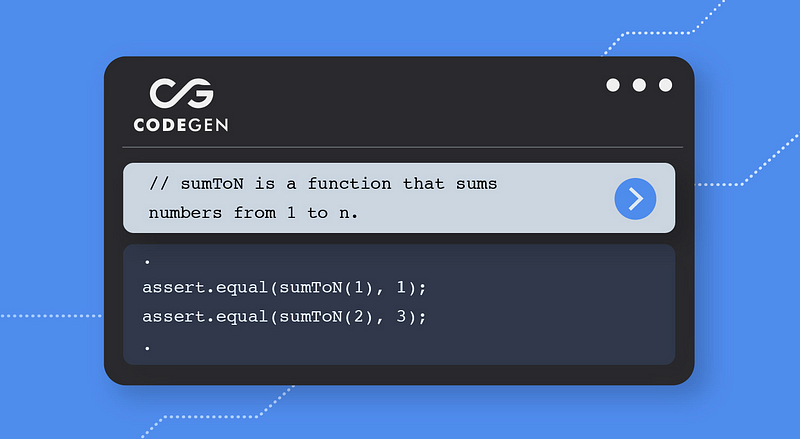

- January 17, 2023

- 3 min read

Fine-tuning and Serving CodeGen, a Code Generation Model, with Friendli Engine

Codegen

Mlops

Transformers

- November 1, 2022

- 1 min read

Save on Training Costs of Generative AI with PeriFlow

Machine Learning

AI

VC

- October 8, 2022

- 2 min read

Serve generative AI models like T5 faster than ever with Friendli Engine (32.8x faster for T5–3B)

Generative AI

Transformers

Mlops

- August 4, 2022

- 2 min read

Friendli Engine: How Good is it on Small Models?

Machine Learning

Transformers

Generative Model

- July 18, 2022

- 7 min read

Friendli Engine: How to Serve Large-scale Transformer Models

AI

Machine Learning

System Architecture

- May 20, 2022

- 3 min read

Introducing GPT-FAI 13B: A Large-scale Language Model Trained with FriendliAI’s PeriFlow

Gpt 3

Mlops

Mlops Platform