- July 3, 2023

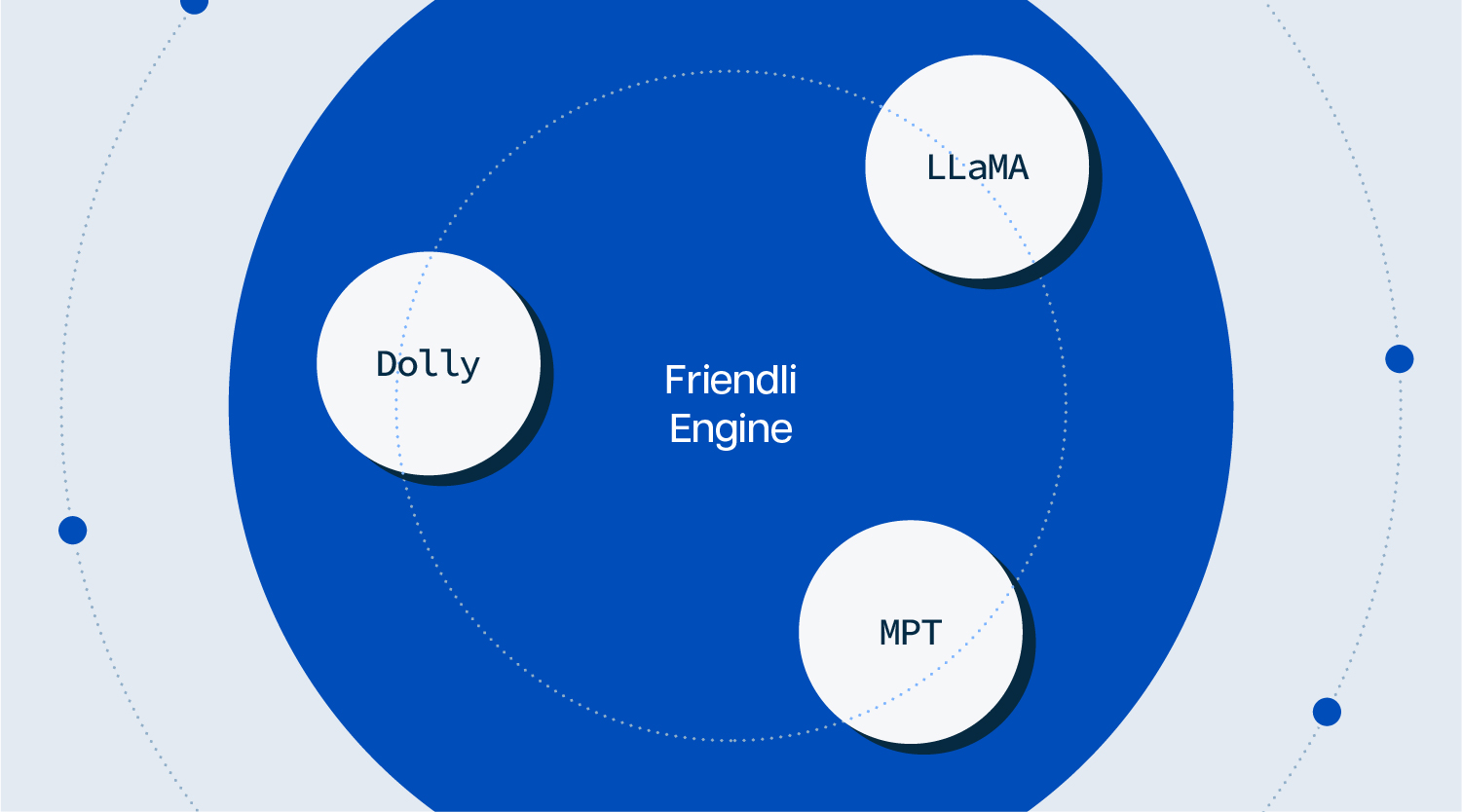

Friendli Engine's Enriched Coverage for Sought-After LLMs: MPT, LLaMA, and Dolly

We have some exciting news to share!

As you may know, Friendli Engine already supports a variety of LLMs, including GPT and T5. We have now added support for three more highly sought-after open-source models: MPT [1], LLaMA [2], and Dolly [3].

MPT

MosaicML provides tools that streamline the process of training machine learning models, and it has recently open-sourced its own LLMs. Recognizing its value, Databricks announced the acquisition of MosaicML for $1.3B [4].

MosaicML’s MPT-7B [5] and MPT-30B [1] were trained using state-of-the-art techniques such as ALiBi and FlashAttention. In particular, MPT-30B supports long-context inference, having been trained with an 8K-token context window. It also stands out as the first public model trained on an NVIDIA H100 cluster.
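For readers unfamiliar with ALiBi (Attention with Linear Biases), here is a minimal sketch of its core idea as described in the ALiBi paper: instead of adding positional embeddings, each attention head adds a penalty proportional to the query-key distance to its attention scores, with a different slope per head. This stdlib-only Python sketch assumes a power-of-two head count, and the function names are hypothetical; it is not MosaicML's or Friendli Engine's implementation.

```python
import math


def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head ALiBi slopes, assuming num_heads is a power of two.

    Head h (1-indexed) gets slope 2 ** (-8 * h / num_heads), a geometric
    sequence such as 1/2, 1/4, ..., 1/256 for 8 heads.
    """
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles a power-of-two head count")
    return [2.0 ** (-8.0 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(num_heads: int, seq_len: int) -> list[list[list[float]]]:
    """Causal ALiBi bias indexed as bias[head][query][key].

    A query at position i attending to a key at position j <= i receives
    -slope * (i - j); future keys (j > i) are masked with -inf.
    """
    slopes = alibi_slopes(num_heads)
    return [
        [
            [-m * (i - j) if j <= i else -math.inf for j in range(seq_len)]
            for i in range(seq_len)
        ]
        for m in slopes
    ]
```

The bias is added to the scaled dot-product scores (q·k / √d) before the softmax. Because the penalty depends only on relative distance, it can be computed for sequence lengths longer than those seen during training, which is one reason ALiBi is associated with long-context inference.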

LLaMA

LLaMA is a collection of foundation models from Meta, available in four parameter sizes: 7B, 13B, 33B, and 65B. Remarkably, LLaMA-13B outperforms GPT-3 (175B) on certain tasks [2], despite having roughly an order of magnitude fewer parameters.

The true value of LLaMA lies in its contribution to the research community: Meta openly shared the training methodology, including the model architecture and code. This transparency fosters a collaborative environment in which researchers can either fine-tune existing LLaMA models or build their own models from scratch using LLaMA’s insights. For example, Alpaca [6], Vicuna [7], Gorilla [8], and Koala [9] are fine-tuned derivatives of the LLaMA models, while RedPajama [10] is a fully open-source reproduction of LLaMA.

Dolly

Dolly is an open-source language model developed by Databricks, based on EleutherAI’s Pythia model [11]. Along with the model checkpoint, Databricks released ‘databricks-dolly-15k’ [12], a new, high-quality, human-generated instruction dataset that played a crucial role in fine-tuning Dolly. Thanks to this dataset, Dolly is the first open-source, instruction-following language model suitable for both research and commercial applications.

In summary, Friendli Engine supports many LLMs and can now serve MPT, LLaMA, and Dolly as well. Friendli Engine also supports various data types, including fp32, fp16, bf16, and int8 (for int8, please refer to our recent blog post!), as well as tensor and pipeline parallelism for various serving environments. Enjoy Friendli Engine's high performance while serving LLMs like MPT, LLaMA, and Dolly!
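To illustrate why the choice of data type and parallelism matters for a given serving environment, here is a rough back-of-the-envelope estimate of weight memory. It is a hypothetical Python helper, not part of Friendli Engine, and it counts model weights only (no activations, KV cache, or int8 quantization metadata), assuming the weights are split evenly across GPUs.

```python
# Bytes needed to store one parameter in each data type mentioned above.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str, num_gpus: int = 1) -> float:
    """Approximate per-GPU memory (GiB) for model weights only.

    Hypothetical helper: assumes weights are divided evenly across num_gpus
    (e.g. via tensor or pipeline parallelism) and ignores activations,
    the KV cache, and any quantization scale metadata.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    total_bytes = num_params * BYTES_PER_PARAM[dtype]
    return total_bytes / num_gpus / 2**30
```

For example, a 30B-parameter model in fp16 needs roughly 56 GiB for its weights alone, while switching to int8 or splitting the model two ways across GPUs roughly halves the per-GPU figure.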

[1] https://www.mosaicml.com/blog/mpt-30b

[2] Touvron, Hugo, et al. “Llama: Open and efficient foundation language models.” arXiv preprint arXiv:2302.13971 (2023).

[4] https://www.mosaicml.com/blog/mosaicml-databricks-generative-ai-for-all

[5] https://www.mosaicml.com/blog/mpt-7b

[6] https://crfm.stanford.edu/2023/03/13/alpaca.html

[7] https://lmsys.org/blog/2023-03-30-vicuna/

[8] https://gorilla.cs.berkeley.edu/

[9] https://bair.berkeley.edu/blog/2023/04/03/koala/

[10] https://www.together.xyz/blog/redpajama

[12] https://huggingface.co/datasets/databricks/databricks-dolly-15k

Written by

FriendliAI Tech & Research
