- November 16, 2023

- 3 min read

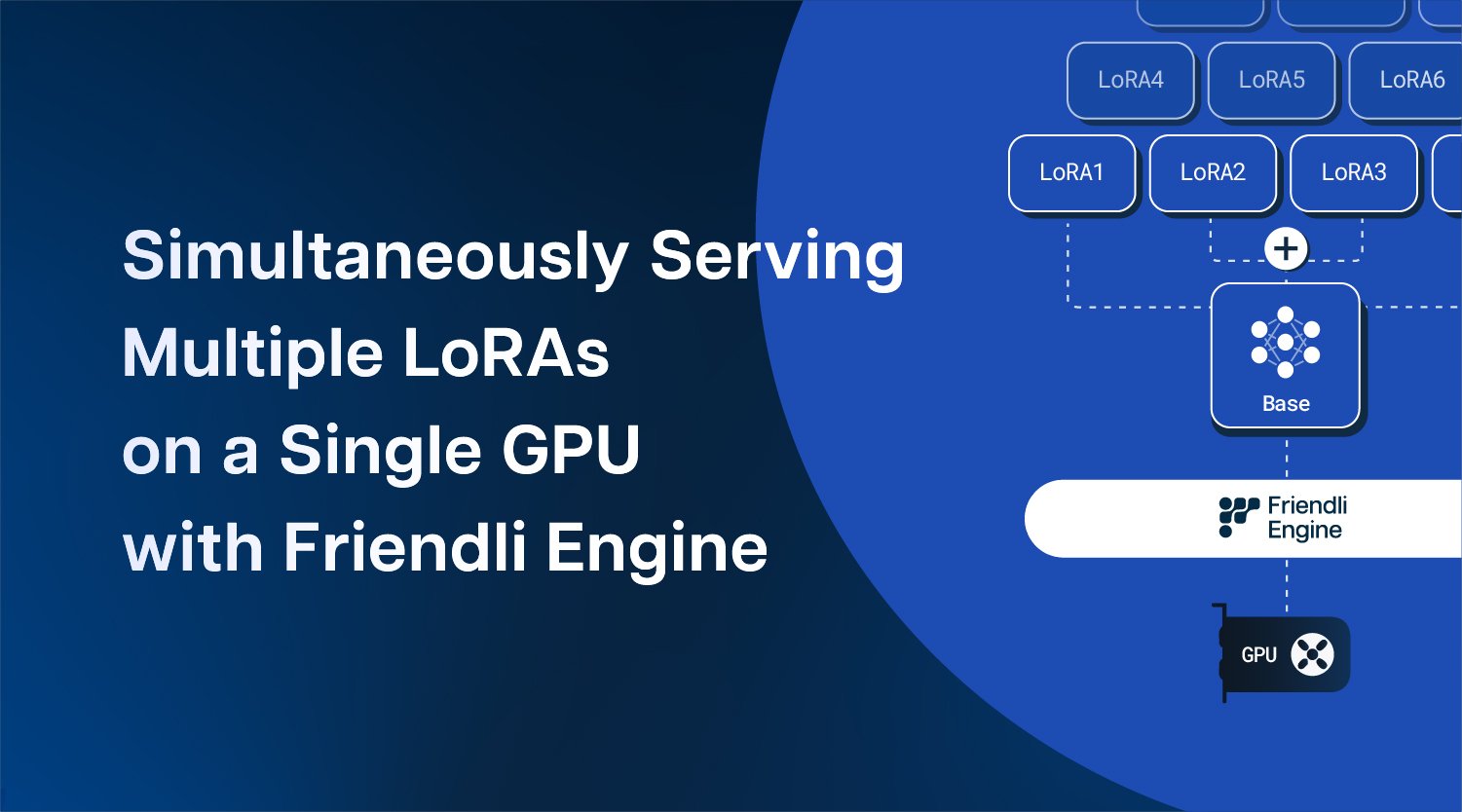

Simultaneously Serving Multiple LoRAs on a single GPU with Friendli Engine

In the ever-evolving realm of large language models (LLMs), a concept known as Low-Rank Adaptation (LoRA) has emerged as a groundbreaking technique that empowers LLMs and other generative-AI models to adapt and fine-tune their behavior with precision. In this article, we will delve into the context in which LoRA has flourished, its surging popularity driven by its remarkable flexibility and effectiveness, and the intriguing concept of serving not only a single LoRA, but multi-LoRA. Moreover, we're excited to share that FriendliAI's Friendli Engine, which pioneered iteration batching (a.k.a. continuous batching), supports multi-LoRA, even on a single GPU, revolutionizing the world of generative AI customization. Let's embark on a journey to understand LoRA and its profound significance.

The Context of LoRA: A Solution for Customization

Large language models have revolutionized the field of natural language processing, enabling applications that range from chatbots to content generation. However, they are often considered as "one-size-fits-all" solutions. In scenarios where customization and adaptation to specific tasks are essential, LLMs can be limited. This limitation has sparked the rise of LoRA.

LoRA offers a solution by introducing adaptability into the world of LLMs. It empowers users and companies to finely adjust and reconfigure the models, like the open-sourced Llama 2 model, to serve their specific needs. This adaptability ensures that LLMs can be harnessed for a diverse range of applications, making them more accessible, effective, and versatile.

The Popularity of LoRA: Flexibility and Effectiveness

The growing popularity of LoRA can be attributed to two key factors: flexibility and efficiency.

- Flexibility: LoRA provides a flexible mechanism for customizing LLM behavior. It allows users to adjust the model's parameters and adapt its responses to specific tasks or contexts.

- Efficiency: LoRA is efficient in enhancing the performance of LLMs as it does not require updating the original model. By fine-tuning models with LoRA, users can achieve task-specific improvements without the need for extensive retraining.

LoRA has become a vital tool for AI researchers, developers, and businesses seeking adaptable solutions in the ever-evolving landscape of AI applications.

Introducing Multi-LoRA Serving

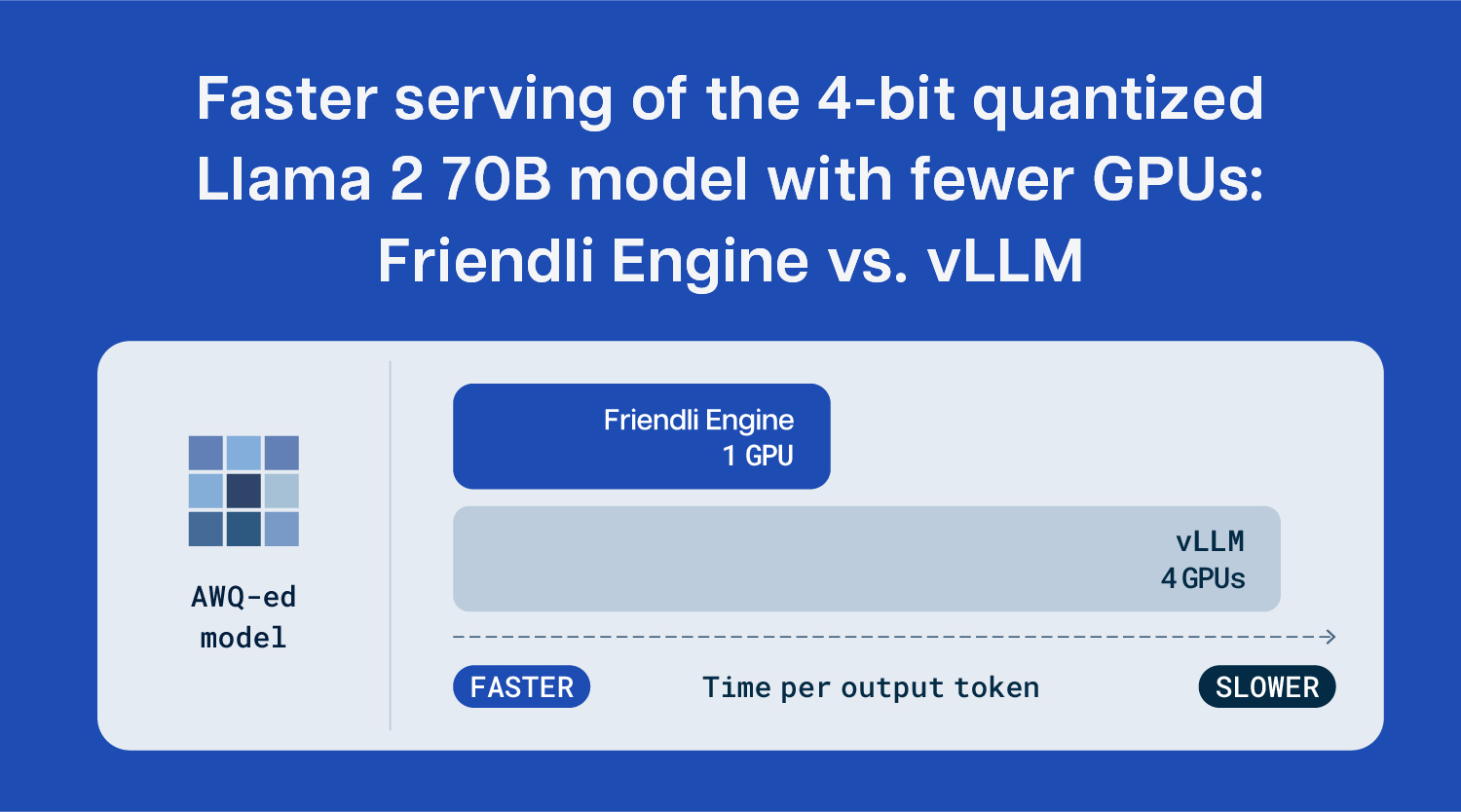

Now, let's introduce a fascinating extension of LoRA called multi-LoRA serving. While LoRA enables adaptability at the model level, multi-LoRA serving takes customization a step further. It allows LLM providers to serve multiple customized models within an efficient number of GPUs by maintaining only a single copy of the original “backbone” model weights while serving multiple LoRA adapters. Multi-LoRA serving opens the door to highly specialized and tailored AI solutions at a greater level of granularity, making it a compelling tool for applications requiring precise adjustments for each customer. However, multi-LoRA serving requires specialized optimizations, including sophisticated batching mechanisms, in order to achieve efficiency on a limited number of GPUs.

Friendli Engine: Pioneering Multi-LoRA on a Single GPU

At FriendliAI, we're dedicated to advancing the capabilities of generative AI serving. We're thrilled to announce that FriendliAI's Friendli Engine simultaneously supports multiple LoRA models on fewer GPUs (even on just a single GPU!), a remarkable leap in making LLM customization more accessible and efficient. In our next article, we'll explore multi-LoRA serving in greater depth with its practical applications, so be sure to stay tuned.

In the world of large language models, LoRA and multi-LoRA serving stand as beacons of adaptability, customization, and effectiveness. Their flexibility empowers generative AI models to serve diverse tasks, making AI more versatile and accessible than ever. With FriendliAI's Friendli Engine supporting multi-LoRA LLM serving, the possibilities are endless. Join us in our next article as we explore the fascinating world of multi-LoRA in greater detail, and discover its profound implications in LLM customization. Try out Friendli Engine today!

Written by

FriendliAI Tech & Research

Share